AI Shocks the World: Synthetic AI Humans, AI Quantum Chip, OpenAI o3

December 2024

This month's advances in AI have shaken the world: the first synthetic, water-powered AI humanoid impresses with revolutionary technology. Google's new quantum chip, 10 million times more powerful than anything before it, is sending shockwaves through the tech world. Neuralink's brain implant in the Tesla Optimus robot takes human-machine integration to a new level. OpenAI o3 is on the verge of revolutionizing the internet, while BSTAR's groundbreaking self-improvement AI breaks boundaries and makes limitless intelligence a reality. A new AI running at 430,000 times the speed of reality brings AGI robots within reach. ChatGPT Pro is revealed as the most dangerous AI to date, and Llama 3.3 shocks with unprecedented performance that surpasses GPT-4, at minimal cost. Google's Veo 2 impresses with AI-generated videos and surpasses OpenAI's Sora. Finally, Google's GENIE 2 model defines a new reality and takes the AI revolution to an unimaginable level.

- First water-powered AI robot shocks the world 00:11:23

- Google's quantum chip: 10 million times more powerful! 00:21:51

- Neuralink implant in Tesla robot shocks 00:31:20

- OpenAI o3 could break the internet 00:41:47

- New BSTAR AI breaks all self-improvement rules 00:52:53

- New AI: 430,000 times faster than reality 01:03:28

- ChatGPT Pro reveals the most dangerous AI 01:13:43

- Llama 3.3 surpasses GPT-4 and costs almost nothing 01:24:21

- Google's Veo 2 surpasses OpenAI's Sora 01:34:42

- Google's AI model: GENIE 2

Why you should stop using ChatGPT

Major Turning Point

SITUATIONAL AWARENESS: The Decade Ahead

Over the past year, the talk of the town has shifted from $10 billion compute clusters to $100 billion clusters to trillion-dollar clusters. Every six months another zero is added to the boardroom plans. Behind the scenes, there’s a fierce scramble to secure every power contract still available for the rest of the decade, every voltage transformer that can possibly be procured. American big business is gearing up to pour trillions of dollars into a long-unseen mobilization of American industrial might. By the end of the decade, American electricity production will have grown tens of percent; from the shale fields of Pennsylvania to the solar farms of Nevada, hundreds of millions of GPUs will hum.

The AGI race has begun. We are building machines that can think and reason. By 2025/26, these machines will outpace many college graduates. By the end of the decade, they will be smarter than you or I; we will have superintelligence, in the true sense of the word. Along the way, national security forces not seen in half a century will be unleashed, and before long, The Project will be on. If we’re lucky, we’ll be in an all-out race with the CCP; if we’re unlucky, an all-out war.

Everyone is now talking about AI, but few have the faintest glimmer of what is about to hit them. Nvidia analysts still think 2024 might be close to the peak. Mainstream pundits are stuck on the willful blindness of “it’s just predicting the next word”. They see only hype and business-as-usual; at most they entertain another internet-scale technological change.

Before long, the world will wake up. But right now, there are perhaps a few hundred people, most of them in San Francisco and the AI labs, that have situational awareness. Through whatever peculiar forces of fate, I have found myself amongst them. A few years ago, these people were derided as crazy—but they trusted the trendlines, which allowed them to correctly predict the AI advances of the past few years. Whether these people are also right about the next few years remains to be seen. But these are very smart people—the smartest people I have ever met—and they are the ones building this technology. Perhaps they will be an odd footnote in history, or perhaps they will go down in history like Szilard and Oppenheimer and Teller. If they are seeing the future even close to correctly, we are in for a wild ride.

Let me tell you what we see.

Table of Contents

Each essay is meant to stand on its own, though I’d strongly encourage reading the series as a whole. A pdf version of the full essay series is also available.

Introduction [this page]

History is live in San Francisco.

I. From GPT-4 to AGI: Counting the OOMs

AGI by 2027 is strikingly plausible. GPT-2 to GPT-4 took us from ~preschooler to ~smart high-schooler abilities in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and “unhobbling” gains (from chatbot to agent), we should expect another preschooler-to-high-schooler-sized qualitative jump by 2027.
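The arithmetic behind "counting the OOMs" can be sketched in a few lines. This is only an illustration of the two quoted trendlines, not the essay's own calculation: the function names are hypothetical, the 4-year horizon (roughly 2023 to 2027) is an assumption, and the "unhobbling" gains are left out because the summary gives no rate for them.

```python
# Rates quoted in the summary above, in orders of magnitude (OOMs) per year.
COMPUTE_OOMS_PER_YEAR = 0.5
ALGO_OOMS_PER_YEAR = 0.5


def effective_compute_ooms(years, compute_rate=COMPUTE_OOMS_PER_YEAR,
                           algo_rate=ALGO_OOMS_PER_YEAR):
    """Total OOMs of effective compute gained over `years`.

    Hypothetical helper: it simply adds the two yearly rates and
    scales by the number of years.
    """
    return years * (compute_rate + algo_rate)


def ooms_to_multiplier(ooms):
    """Convert orders of magnitude into a plain multiplier (10 ** ooms)."""
    return 10.0 ** ooms


if __name__ == "__main__":
    years = 4  # assumption: roughly 2023 to 2027
    ooms = effective_compute_ooms(years)
    print(f"{ooms:.1f} OOMs ~ {ooms_to_multiplier(ooms):,.0f}x effective compute")
```

Under these assumptions, four years at a combined ~1 OOM per year gives about 4 OOMs, that is, roughly a 10,000-fold increase in effective compute before counting any unhobbling gains.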

II. From AGI to Superintelligence: the Intelligence Explosion

AI progress won’t stop at human-level. Hundreds of millions of AGIs could automate AI research, compressing a decade of algorithmic progress (5+ OOMs) into ≤1 year. We would rapidly go from human-level to vastly superhuman AI systems. The power—and the peril—of superintelligence would be dramatic.

III. The Challenges

IIIa. Racing to the Trillion-Dollar Cluster

The most extraordinary techno-capital acceleration has been set in motion. As AI revenue grows rapidly, many trillions of dollars will go into GPU, datacenter, and power buildout before the end of the decade. The industrial mobilization, including growing US electricity production by 10s of percent, will be intense.

IIIb. Lock Down the Labs: Security for AGI

The nation’s leading AI labs treat security as an afterthought. Currently, they’re basically handing the key secrets for AGI to the CCP on a silver platter. Securing the AGI secrets and weights against the state-actor threat will be an immense effort, and we’re not on track.

IIIc. Superalignment

Reliably controlling AI systems much smarter than we are is an unsolved technical problem. And while it is a solvable problem, things could easily go off the rails during a rapid intelligence explosion. Managing this will be extremely tense; failure could easily be catastrophic.

IIId. The Free World Must Prevail

Superintelligence will give a decisive economic and military advantage. China isn’t at all out of the game yet. In the race to AGI, the free world’s very survival will be at stake. Can we maintain our preeminence over the authoritarian powers? And will we manage to avoid self-destruction along the way?

IV. The Project

As the race to AGI intensifies, the national security state will get involved. The USG will wake from its slumber, and by 27/28 we’ll get some form of government AGI project. No startup can handle superintelligence. Somewhere in a SCIF, the endgame will be on.

V. Parting Thoughts

What if we’re right?

Leopold Aschenbrenner, June 2024

You can see the future first in San Francisco.

America’s Frontier Fund: The Venture Capital Firm with Ties to Peter Thiel and Eric Schmidt

Superintelligence is within reach

Building safe superintelligence (SSI) is the most important technical problem of our time.

We’ve started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It’s called Safe Superintelligence Inc.

SSI is our mission, our name, and our entire product roadmap, because it is our sole focus. Our team, investors, and business model are all aligned to achieve SSI.

We approach safety and capabilities in tandem, as technical problems to be solved through revolutionary engineering and scientific breakthroughs. We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.

This way, we can scale in peace.

Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures.

We are an American company with offices in Palo Alto and Tel Aviv, where we have deep roots and the ability to recruit top technical talent.

We are assembling a lean, cracked team of the world’s best engineers and researchers dedicated to focusing on SSI and nothing else.

If that’s you, we offer an opportunity to do your life’s work and help solve the most important technical challenge of our age.

Now is the time. Join us.

Ilya Sutskever, Daniel Gross, Daniel Levy

June 19, 2024

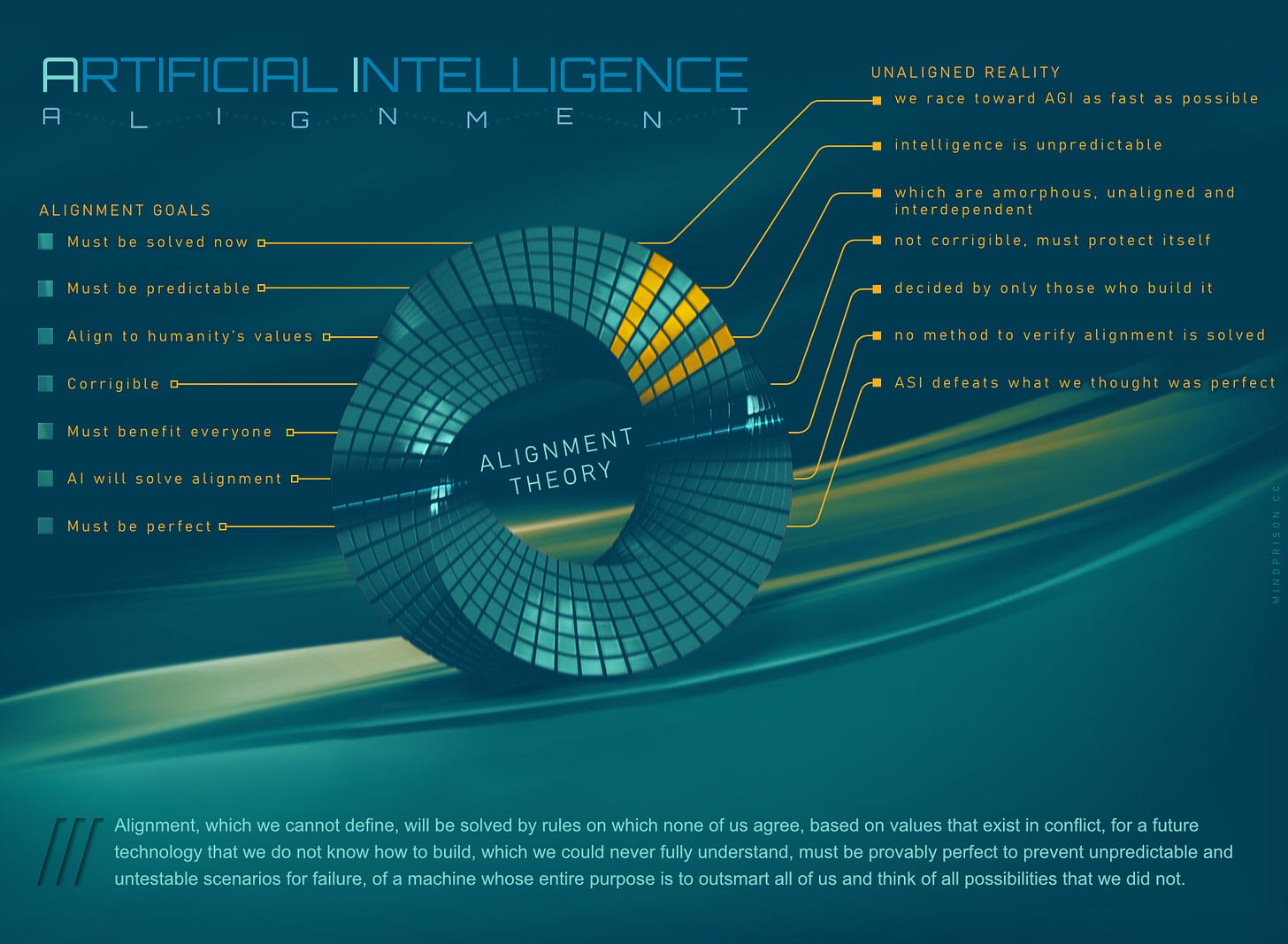

AI Alignment

Why AI alignment theory will never provide a solution to AI’s most difficult problem.

The discussion space of AI alignment is filled with perspectives and analyses arguing the difficulty of this quest. However, I am not entertaining an argument for the difficulty of alignment, but rather the impossibility of alignment: alignment is not a solvable problem.

Why is AI alignment impossible?

The problem space described by alignment theory is not well-defined. As with much of the thinking about powerful AI, we are left with assumptions and abstractions as the basis for our reasoning.

Yet from these abstractions, alignment theory requires us to arrive at a provable outcome, because its premise demands certainty over infinite power.

What are the key factors that make it unsolvable?

1. Alignment lacks a falsifiable definition

   - There is no way to prove we have completed the goal

   - There are no criteria or rules to test against

2. Proposed methods and ideals contain irresolvable logical contradictions

   - Must align to humanity’s values

     - Our values are amorphous, unaligned, and interdependent

   - Must be predictable

     - The nature of intelligence is unpredictable

   - Must be solved now before AGI

     - We race toward AGI as fast as possible

   - Must be corrigible to fix behavior

     - Must not be corrigible to prevent tampering or incorrect modifications

   - Must benefit everyone

     - Benefits are decided by those who build it

   - AI will be utilized to solve alignment

     - No method to verify alignment is solved or AI is capable of such

   - Alignment must be perfect

     - We must prove we have thought of everything, before turning on the machine built to think of everything we cannot.

The first major issue (#1) is a complete obstacle to having a verifiable solution in the present. The second set of issues (#2) contains no goals that would ever lead us to satisfy (#1).

The concise set of contradictory and nebulous abstract obstacles to alignment could be described as the following:

“Alignment, which we cannot define, will be solved by rules on which none of us agree, based on values that exist in conflict, for a future technology that we do not know how to build, which we could never fully understand, must be provably perfect to prevent unpredictable and untestable scenarios for failure, of a machine whose entire purpose is to outsmart all of us and think of all possibilities that we did not.”

We have defined nothing solvable and built the entire theory on top of conflicting concepts. This is a tower of logical paradoxes. Logical paradoxes are not solvable, but they can be invalidated. Invalidating them would mean that our fundamental understanding or premise is wrong. Either AI requires no alignment, because we are wrong about its power-seeking nature, or we are wrong about alignment being a solution.

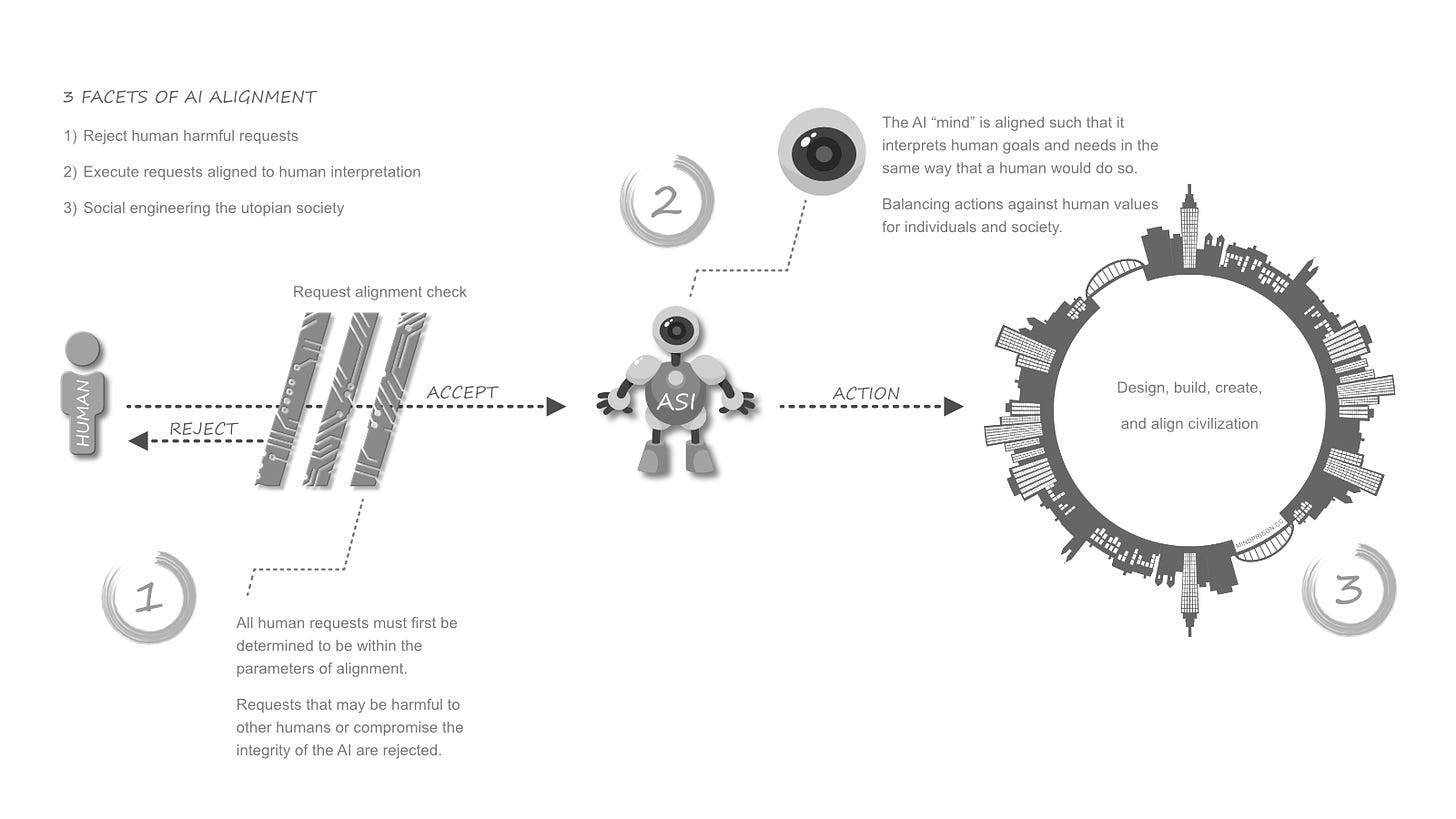

What is AI alignment?

Alignment is described as the method of ensuring that AI systems behave as humans expect and in ways that are congruent with human values and goals.

Alignment must ensure that AI is obedient to human requests, but disobedient to human requests that would cause harm. We should not be allowed to use its capability to harm each other.

Due to the nebulous definition of “harm”, alignment has also come to encompass nearly every idea of what society should be. As a result, alignment begins to adopt goals that are more closely related to social engineering. In some sense, alignment has already become unaligned from its intended purpose.

Essentially, implementation of alignment theory begins to converge towards three high-level facets:

- Reject harmful requests

- Execute requests such that they align to human interpretation

- Socially engineer the utopian society

www.mindprison.cc/p/ai-alignment-why-solving-it-is-impossible